Doc Searls recently brought my attention to a White Paper by Phil Windley, about his company, Kynetx. It does a good job explaining the thinking behind their architecture, and raises some questions that, for me, challenge some underlying assumptions and business choices.

Problem Domain

The distributed nature of the web is a big part of its power: nobody needs to ask permission from a central authority to use it or create with it. However, that disaggregation limits the cohesion for sophisticated uses, leaving users to cobble together ad-hoc mash-ups of value from multiple, diverse service providers.

For example, the average travel planner spends 29 days from their first query to their first purchase. No tool I know of facilitates that entire process effectively.

Solving this problem in a general way—while retaining the authority of the individual and the flexibility of open systems—is perhaps the greatest opportunity for VRM. The personal data store and VRM relationship services are two prongs of an architectural shift for enabling this kind of aggregation while remaining open. Once you put the user in the driver’s seat, with coherent controls over the flow and the data, the experience can integrate around the user, even as they drive anywhere on the Internet.

Solution

Kynetx’s solution is built on one primary capability:

A rules engine (and language) for contextual customization based on strong identity-based claims, using the user-centric Identity of Information Cards.

This puts Kynetx squarely in the web augmentation service business. Adaptive Blue (and their Glue product) is perhaps the most sophisticated approach to this space, but Yahoo’s Toolbar also augments web pages, as does Skype (putting its SkypeOut button on any phone number it recognizes). The granddaddies of all web-augmentation services are the ad blocker plug-ins that remove banner ads on websites.

I distinguish web augmentation from web media enhancements, like PDF and Flash and Java, in that the latter are embeddable or downloadable extensions to the core HTML/http architecture of the web, while augmentation services provide third-party manipulation of website presentation on behalf of the user. They actually tweak the web page as the user sees it, rather than offering websites a new way to package content or functionality.

Web augmentation isn’t new, but it is gaining adoption and breadth. There is a low-grade market war going on in this space. While browsers define the official battleground of the World Wide Web, augmentation services are the guerrilla warriors of next-generation browsing. The approach that reaches ubiquity first will create significant value throughout the architecture: for users, software vendors, and service providers.

So, the question that comes to my mind is where does Kynetx fit into all of this?

The value proposition of a rules engine for customization is powerful, if that engine makes it easy to leverage strong identity. Every website will, imo, want to take advantage of the unique value of user-centric identity and Information Cards in particular. However, rewriting your customization to do that will take resources, and that will slow adoption. If Kynetx can simplify how websites plug in to the Identity meta-layer, that sounds like real value.

Gaps

There are, however, several gaps that I see in Kynetx’s approach as mapped out in the white paper.

First, who are the target developers: websites, third-party services, or both?

It’s not clear to me if the primary authors of KRL rulesets (and hence Kynetx’s customers) will be the destination website developers or third-party augmentation services. For example, Adaptive Blue’s Glue augments web pages so that things like movies can be recognized across domains for social commentary, ratings, and sharing. That means that Glue modifies the presentation of web pages at IMDB, Netflix, Amazon, Blockbuster, etc. In this pattern, it is the third party, Glue, that would be running KRL rulesets, not the websites.

Is this the intended architecture for Kynetx? Is the point of the Kynetx Information Card to provide authorization by the user to allow services like Glue to augment their web experience, while the rest of the plug-in handles injection into the web page within the browser?

Or, is the main point that web services themselves would leverage Kynetx’s Information Card approach to manage third party identity for customization? For example, so Hertz could seamlessly provide AAA or AARP discounts if, and only if, the appropriate AAA or AARP information cards (KIX) are presented by the user? In this case, Hertz writes the customization, but doesn’t need to know upfront what the user’s affiliations might be.
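To make this second case concrete, here is a minimal Python sketch of claims-driven customization. It is not KRL and it is not Kynetx’s API; the `Rule` type, the claim names, and the discount percentages are all hypothetical, chosen only for illustration. The point is simply that the site writes its rules against claims, not against users it already knows.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical rule: if the user presents `required_claim`, offer `discount_pct`."""
    required_claim: str
    discount_pct: int


# Illustrative values only; not taken from Hertz, AAA, or AARP.
RULES = [
    Rule(required_claim="aaa_member", discount_pct=10),
    Rule(required_claim="aarp_member", discount_pct=15),
]


def best_discount(presented_claims: set[str], rules: list[Rule]) -> int:
    """Return the largest discount whose claim the user actually presented."""
    matching = [r.discount_pct for r in rules if r.required_claim in presented_claims]
    return max(matching, default=0)


print(best_discount({"aaa_member"}, RULES))                 # 10
print(best_discount({"aaa_member", "aarp_member"}, RULES))  # 15
print(best_discount(set(), RULES))                          # 0
```

Nothing in `best_discount` knows who the user is or which affiliations exist in the world; supporting a new affiliation means adding a rule, not changing the site’s customization code.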

If the first case is intended, the white paper doesn’t do a good job explaining how this fits into a larger, open ecosystem, nor does it highlight this unique architectural opportunity. If a user wants Orbitz to help augment their travel planning experience, even when they are at Expedia or Southwest Airlines or Hilton.com, it would be great to do that in a secure, authorized, privacy-sensitive way. But it isn’t quite clear if this is the point of Kynetx’s approach (although it is a great opportunity, one that r-buttons and SwitchBook see in the not-so-far future).

If the second case is the goal, it isn’t clear to me why Kynetx is better than other customization frameworks. With a card selector and cards issued from the right authority, users can already present AAA or AARP credentials to websites, which in turn can integrate that information into their existing CMS or other presentation code (Drupal, PHP, perl, Ruby-on-Rails, etc.). If the value proposition is in speed-to-market for identity-based customization, then the white paper needs to make that case first and foremost. If that’s the goal, then it also suggests a business model, which I talk about in a bit.

It could also be that both of these are part of the approach: allowing both the website developer and third parties to augment the web experience based on strong identity. This is the general idea behind r-buttons and would almost certainly speed deployment. However, the white paper doesn’t address the issues of contention when multiple providers want to augment the same page. Given the open-ended JavaScript functionality associated with a KIX, this could be a challenge.

Second, isn’t re-aggregation actually about creating a coherent context?

While the Kynetx approach allows users to present a particular relationship at a particular website, that doesn’t seem to solve the stated problem. I don’t see how it actually achieves a cross-web aggregated experience. In fact, it seems that the best aggregated experience should combine many relationship cards at many different services. In the 29-day travel planning scenario, won’t users need to send their AAA and AARP cards to every site they visit? (Or some large subset?) Does the card selector require a ceremony for every website, every session? Or is it a one-time, permanent approval, such as confirming once with Expedia that the user is a AAA member? Managing this A × B complexity, with A Information Cards and B websites, scales poorly if every site has a distinct ceremony, and even worse if each card presented at each site is a distinct ceremony.
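A quick back-of-the-envelope sketch shows how fast the ceremony count grows. The four policies and the numbers below (2 cards, 100 sites, 3 sessions per site) are my own assumptions for illustration; the white paper doesn’t say which policy applies.

```python
def ceremonies(cards: int, sites: int, sessions_per_site: int, policy: str) -> int:
    """Count card-selector ceremonies under a hypothetical approval policy."""
    if policy == "per_card_per_site_per_session":
        return cards * sites * sessions_per_site
    if policy == "per_site_per_session":
        return sites * sessions_per_site
    if policy == "per_card_per_site_once":
        return cards * sites
    if policy == "per_site_once":
        return sites
    raise ValueError(f"unknown policy: {policy}")


# Assumed scenario: AAA and AARP cards, 100 sites, 3 visits to each site.
for policy in (
    "per_card_per_site_per_session",
    "per_site_per_session",
    "per_card_per_site_once",
    "per_site_once",
):
    print(policy, ceremonies(cards=2, sites=100, sessions_per_site=3, policy=policy))
```

Under these assumptions the counts are 600, 300, 200, and 100. Even the most forgiving policy leaves the user with a hundred interruptions over one trip’s worth of planning.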

This apparent model of KIX-based aggregation seems to miss an opportunity, one that is near to my heart as the core of the Search Map architecture for User-driven Search. It seems to me that for a given web-based task, such as travel planning, what you need is a user-driven personal data store that tracks the user’s progress across the Web. This data store should be 100% transparent, 100% editable, and seamlessly transferable/accessible to authorized vendors under terms controlled by the user. We call our version of this a Search Map, an electronic document that provides the user a concrete way to manage and express their Search intent. It is also a seamless way to manage and express user context.

In the white paper, Phil asserts that “users are freed from managing episode context themselves” as a core benefit. But I don’t think this is actually a benefit. Attempting to achieve that goal could end up being more patronizing than useful, following in the footsteps of “Clippy,” the Microsoft Office assistant, which tried to figure out the context and help users, but failed miserably. “I see you are writing a letter. Would you like assistance?” Ack!

It’s not that users don’t want to manage their context, it’s that they haven’t been given simple, value-producing tools to do so. Consider spreadsheets: it’s not that users want to balance the budget on a computer—doing budgets on a computer isn’t inherently rewarding. It’s that spreadsheets make it easy to get value out of balancing their budget on the computer. Managing KIX across 29 days of travel planning and potentially a hundred+ websites sounds like a chore… unless we have a coherent expression of the context (in something like a Search Map, perhaps) that is easy to use and immediately useful.

Third, over-centralization limits scale.

The Kynetx model, as I understand it, doesn’t scale to the full World Wide Web, because it centralizes two core functions: resolving requests for augmentation and validating injected JavaScript as safe, private, and secure. Both of these constrain the growth opportunity for a KRL-based approach to augmenting web services. First, it places the core usage-time server demand on a single service. Given the business model of charging for ruleset evaluations, there is no obvious incentive for Kynetx to release an open source reference implementation that would make it easier for alternate KRE service providers to emerge. In fact, there is every expectation that Kynetx will be motivated to “win the market share” battle and be the primary KRE service. That, unfortunately, makes it just another silo, one that will face precisely the same sort of scaling issues that plague Twitter. Second, by making Kynetx the arbiter of “quality,” it places a single entity in control of what constitutes “safe.” Even with good intentions, such centralized moral authority is not just dangerous, it alienates potential innovation. Nobody wants to be forced to seek permission for their new functionality. That was, IMO, the primary reason the World Wide Web dominated AOL so quickly.

The way to reach web scale is to make it absolutely trivial for /anyone/ to play the game. Several open source implementations and open standards enabled anyone who wanted to, to set up their own web server and try out the World Wide Web as a service provider. And, despite that lack of central control, lots of companies made lots of money providing enhanced software to manage those systems. So don’t fall for the illusion that central control is required or desirable for a big financial win.

Signing software is understood technology; we can enable signed KIX functionality with a validated identity as a first step towards quality control. Then, by opening up the validation service, and separating it from the distribution/matching of those KIX functions, we can allow software developers and service providers the freedom to innovate and provide their own approaches to what is valid and what isn’t. Some providers will choose to accept ANY signed KIX and simply track reputation. Others will charge developers a fee, but run each submission through a quality control check and review. By opening it up, you allow users and developers the freedom to manage KIX quality however they like, without building a presumptive “download at your own risk” ecosystem.
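Here is a rough Python sketch of what separating signature checking from validation policy could look like. Everything in it is an assumption on my part: the function names are hypothetical, and an HMAC with a shared key stands in for a real public-key signature only so that the example runs on the standard library alone.

```python
import hashlib
import hmac
from typing import Callable

# ASSUMPTION: HMAC with a shared key is a stand-in for a real public-key
# signature scheme; a deployed system would not share signing keys this way.


def sign(script: bytes, developer_key: bytes) -> str:
    """Produce a hex signature over the script bytes."""
    return hmac.new(developer_key, script, hashlib.sha256).hexdigest()


def signature_valid(script: bytes, signature: str, developer_key: bytes) -> bool:
    """Check the signature in constant time."""
    return hmac.compare_digest(sign(script, developer_key), signature)


# A validator is any policy that decides whether a signed developer is acceptable.
Validator = Callable[[str], bool]


def accept_any_signed(developer_id: str) -> bool:
    """Policy 1: any correctly signed script is allowed (reputation tracked elsewhere)."""
    return True


def reviewed_only(reviewed: set) -> Validator:
    """Policy 2: only developers who passed a (hypothetical) review are allowed."""
    def policy(developer_id: str) -> bool:
        return developer_id in reviewed
    return policy


def may_inject(script: bytes, signature: str, developer_id: str,
               developer_key: bytes, validator: Validator) -> bool:
    """Signature check is fixed; the acceptance policy is pluggable."""
    return signature_valid(script, signature, developer_key) and validator(developer_id)


key = b"demo-key"
script = b"console.log('augment');"
sig = sign(script, key)

print(may_inject(script, sig, "dev-1", key, accept_any_signed))         # True
print(may_inject(script, sig, "dev-1", key, reviewed_only({"dev-2"})))  # False
print(may_inject(script + b"x", sig, "dev-1", key, accept_any_signed))  # False
```

The design choice that matters is that `may_inject` takes the validator as a parameter: different providers can plug in different ideas of “quality” without anyone changing how scripts are signed or distributed.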

With Kynetx the sole authority on “quality” for KIX functionality, we would have both a technical and a political bottleneck that would retard the adoption of a generalized approach to the disaggregated web experience.

[Btw, it would be great if there were a name for the javascript injected into the browser when a KRL rule fires after evaluating the context and the user identity. This is currently just the “associated KIX functionality”, which is a bit wordy.]

Fourth, what about privacy and data rights management?

On the whole, it isn’t clear to me what data might be sent around in the claims of various Information Cards, but there is no discussion in the white paper about the data rights associated with that information. If I’m telling Hertz that I’m an AARP member, can they use that data to start sending me junk mail or spam targeting AARP members? Frankly, this is a hole in the entire user-centric Identity framework. OpenID Attribute Exchange and Information Cards allow users to use a third party service for the management and presentment of minimally sophisticated facets of identity (much better than username & password), but neither inherently enables users to specify a data rights regime for the claims or attributes so provisioned. In effect, we’ve made it easy for users to provide additional data about themselves, but missed the opportunity for users to easily control the use of that data.

Since Kynetx has a goal of seamlessly augmenting users’ web experience, isn’t it incumbent on them to assure that seamlessness both protects users’ right to privacy and prevents unintended over-customization based on supposedly private data? This is another manifestation of the “TiVo thinks I’m gay” problem, where TiVo analyzes viewing behavior and assumes things about the user, with no way for the user to manage their profile. The data rights problem happens because there is nothing to keep TiVo from telling Hertz, GE, or NBC they think the user is gay. The problem in the Kynetx approach happens when service providers start passing presumably private data to third parties, and users lack the means to control that leakage once the service provider knows certain data. This level of data rights control needs to be built in from the start for VRM and user-driven applications.

Business Model

At the core, I think the business model needs rethinking. Although a CPM-based pricing for KRL evaluations seems to align the value proposition directly with costs, it actually presents more risk and less control to potential customers than other models. It also presents greater risk and less stability for Kynetx itself.

What service providers and developers want to see in a technology platform is a free entry point (so you can start testing and trying it ASAP, even if a production system would need a for-fee license), a constrained, predictable cost structure, and economies of scale. Charging per evaluation offers none of these.

This model instead creates an artificial scarcity and then charges by the drop. What you want is to create abundance and sell buckets and hoses and pumps. Doc calls this the “because of” effect. Constraining KRL evaluation to support a pay-by-drink business model will artificially constrain adoption. Instead, run to ubiquity and sell the best tools for leveraging the system you’ve helped create.

At the same time, the evaluation of rulesets will have highly variable demand, with great spikes and drops far outside of Kynetx’s control. Tying revenue to that demand volatility means an unpredictable, wild revenue profile, flattening out only with insanely large numbers of users. This works for mega services like Amazon Web Services, but for a startup moving from initial revenue to predictable cash flow, it can be unsettling. In contrast, an IDE sales model or a subscription-based service with monthly fees bounds developer expenses and stabilizes the revenue curve.
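To illustrate the difference, here is a small Python sketch comparing the two revenue shapes. Every number in it is made up for the sake of the arithmetic (the monthly evaluation counts, the $0.50 CPM, and the 20 subscribers at $25 a month); none of them come from the white paper.

```python
def per_evaluation_revenue(evaluations: list, cpm_dollars: float) -> list:
    """Revenue per month when charging `cpm_dollars` per thousand evaluations."""
    return [n / 1000 * cpm_dollars for n in evaluations]


def subscription_revenue(months: int, subscribers: int, monthly_fee: float) -> list:
    """Revenue per month when every subscriber pays a flat monthly fee."""
    return [subscribers * monthly_fee] * months


# Hypothetical demand with spikes and drops outside the vendor's control.
monthly_evaluations = [200_000, 1_500_000, 300_000, 2_000_000]

usage = per_evaluation_revenue(monthly_evaluations, cpm_dollars=0.50)
flat = subscription_revenue(len(monthly_evaluations), subscribers=20, monthly_fee=25.0)

print(usage, "swing:", max(usage) - min(usage))
print(flat, "swing:", max(flat) - min(flat))
```

With these assumed figures both models bring in the same $2,000 over four months, but the per-evaluation revenue swings between $100 and $1,000 a month while the subscription revenue sits at $500 every month.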

I like the idea of KRL rulesets. Currently, SwitchBook is planning on using Javascript, RegEx, and XPath, for similar evaluations. That approach not only feels ad-hoc, it is. I’d like to see a unified approach that is flexible, cross-platform, and supported by a good development and test environment.

I think Kynetx could go far by creating an open source platform for KRL rulesets, then providing a robust IDE and testing framework for those who want to manage KRL rules to meet business needs. The White Paper points nicely in this direction when it mentions A/B testing with different KRL rulesets. This is precisely the kind of sophistication that businesses will need to make the most of KRL, and it can easily be separated from the core infrastructure that enables KRL in an open way for everybody. Also, consulting to analyze, customize, and manage KRL rulesets is a huge business opportunity. Doing that well is likely to remain a black art for a long time to come; helping Fortune 1000 companies do it well should be lucrative.

As Dale Olds put it, referring to Novell’s Bandit Project: first, enable an open identity metasystem, then sell tools to companies to help them manage it.

Collaborations

I like the value proposition of platform-independent identity-based customization. It fits well with VRM’s r-buttons, MyDex’s Personal Data Store service, and SwitchBook’s Search Maps. I think there’s still some brain work to be done figuring out how we can all support each other and simultaneously build sustainable business models, but I’ve no doubt there’s a way if we all invest in exploring those opportunities. Although I focused on questions and concerns about Kynetx in this post, I have great respect for Phil and hope to work with him as both our companies–and the entire VRM community–build out viable solutions to these kinds of problems.

There is a low-grade market war going on in web augmentation services, part of a huge shift in how developers and users perceive the web.

The mashup/API culture knows that not every website can be the best at everything. Instead, mash up those that are the best into custom-combined web pages. The shift away from monolithic web services began here, applying multi-source content to a centralized experience. It was still predicated on users visiting a central website, but it was a start at redefining the perspective from which the web should be constructed.

It comes down to a question of open systems. Open systems that work, work when everyone does it, because that’s where you get game-changing economies of scale. The network effect only happens if the value of the system increases when more and more people use it, and open systems are all about the network effect.

What if every company that wanted to “augment” your web experience started inserting content, buttons, and JavaScript into web pages? Even if we restrict the augmentation to just those services you trust and appreciate, those companies or organizations or movements that you want to help you, we still have a veritable cacophony of conflicting augmentations. What happens when your library, Borders.com, and your favorite local bookstore all want to “augment” a listing of 1984 by George Orwell? And I haven’t even started on the list of folks wanting to tell you about movie versions, plays, online videos, derivative works, Wikipedia articles, and discussion groups about the book.

Being a good netizen requires thinking about these issues, just as being a good citizen means thinking about how private actions affect the public good. To build out this next generation of identity-enabled web augmentation services, we would all do well to think through what happens when everyone does it.

We (SwitchBook) haven’t begun to solve that problem, but we look forward to working with the rest of the open community to figure out how to make it work. At the end of the day, the collection of interfaces and services that provide the most value to users is going to win. Everything between here and there is just wasted development dollars, even if it generates millions in profits for those fighting the tide.


Google Notebook fit a unique spot in the Google product portfolio, and as you can see in the

It is an interesting experiment, if only because it shows how seriously Google takes Wikipedia as competition; the functionality is nearly identical to Wikipedia’s search engine,

For example, let’s say you search Google for “travel” and delete Travelocity, Expedia, and CheapTickets, because you’ve already tried those sites and are looking for something new. Then after browsing a bit, you realize you want to see websites for air travel, so you change the query to “air travel”. Surprise! All those results you deleted are back in the list.

Search Maps put the user in charge of all the data related to their Search. Search Maps enable true

User Driven Search is more than what we type into the query box and the results we get back from Search Engines. It covers an entire set of activities that span the Internet, including searches entered at site-specific Search Providers like

There are ways to

When treating Searches that span more than single queries, users need to be able to separate them into their natural topical breakdown, in whatever way makes sense. Collecting our entire search history and/or clickstream into a

Currently, Google, and its DoubleClick division, track your entire search history and just about anywhere you might go online, yet you have no idea what information they have on you, except for Google’s Search History, and you certainly can’t edit it. So when you track something down on a lark, or someone else uses your machine, irrelevant data gets bundled into your history, only to clog up the machinery that is actually trying to help you. Buy a book on knots for your young cousin and Amazon will be recommending Boy Scout titles for months. This is sometimes referred to as the “TiVo thinks I’m gay” problem. If users have neither visibility nor control over the data used for recommendations, they can’t correct these types of errors. We must have visibility into the data driving advertising and search results, and we must be able to edit it as well.

Recommendation systems presume that an analysis of your history is the best way to discover what you might want now. The

At the

Not just tuna salad

Google

The limits on the user-driven aspects of Google are particularly ironic given that it is precisely the element of user control that creates Google’s greatest asset: focused attention.

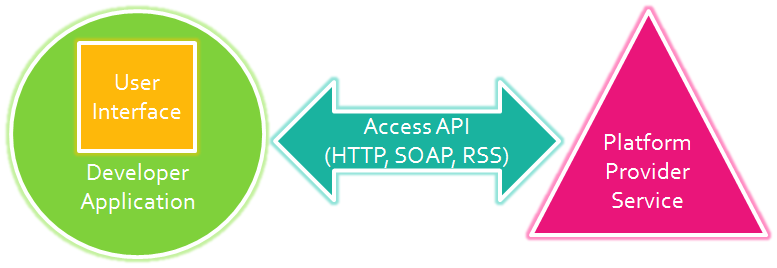

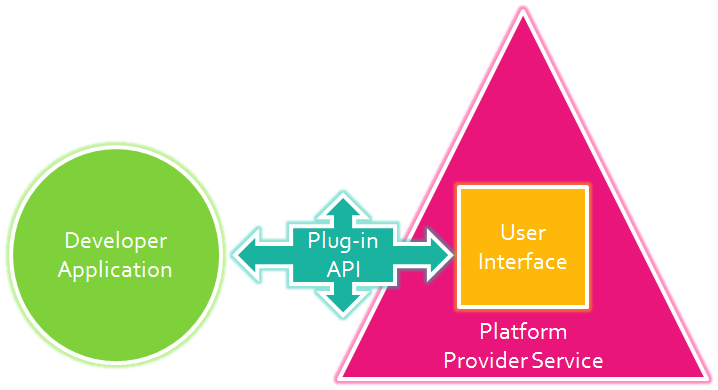

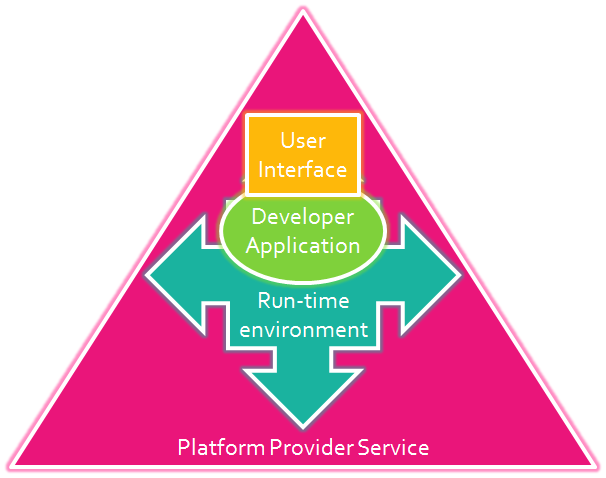

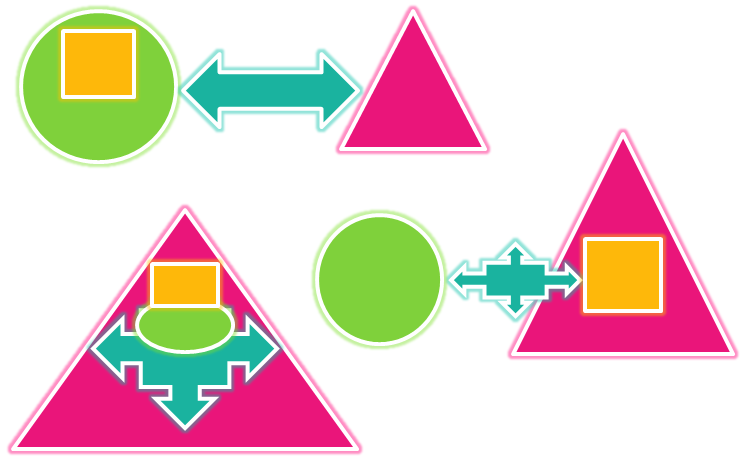

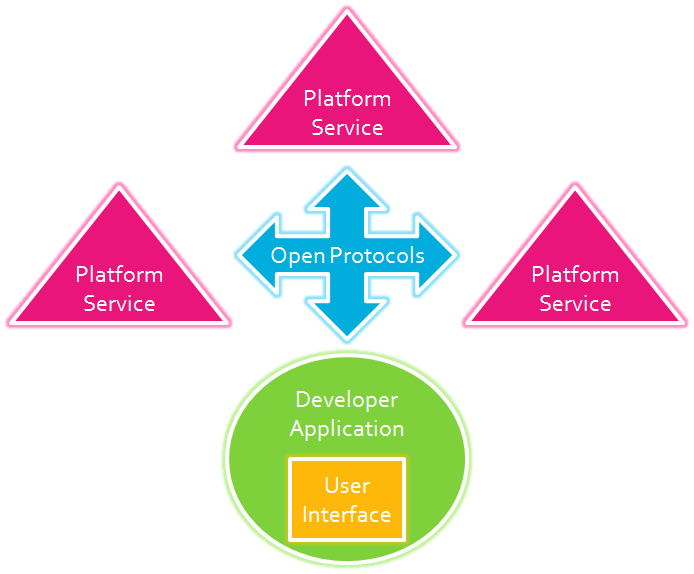

User Interface

Developer Application

Platform Enabler

Platform Service Provider